Meta Releases 'First Hand' Demo to Showcase Hand-Tracking Capabilities

Image courtesy of: Meta

By FRΛNK R. · August 5, 2022

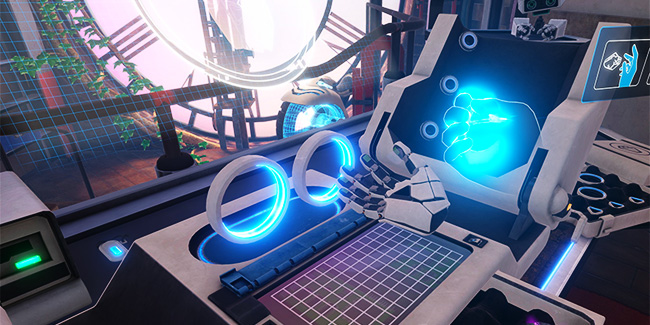

Meta announced this week the launch of First Hand, a new demo designed to show developers the hand-tracking capabilities and the variety of interaction models that are possible without a physical controller. According to Meta, the app was inspired by the company's original Oculus First Contact demo, which showcased Touch controller (6DoF) interactions. Although First Hand is aimed primarily at developers, it is available to all users as a free download from Meta's App Lab.

The demo is built with Meta's Interaction SDK, part of the company's 'Presence Platform,' a library of easy-to-use tools that help developers create a wide range of immersive experiences, from mixed reality to natural hand-based interactions with a realistic sense of presence. First Hand is also released as an open-source project, so developers can access and experiment with the code, study how it works, and replicate similar interactions in their own hand-tracking games and apps.

"First Hand showcases some of the Hands interactions that we've found to be the most magical, robust, and easy to learn but that are also applicable to many categories of content. Notably, we rely heavily on direct interactions," a blog post from Meta explains. "With the advanced direct touch heuristics that come out of the box with Interaction SDK (like touch limiting, which prevents your finger from accidentally traversing buttons), interacting with 2D UIs and buttons in VR feels really natural."

“We also showcase several of the grab techniques offered by the SDK,” the company further explained. “There’s something visceral about directly interacting with the virtual world with your hands, but we’ve found that these interactions also need careful tuning to really work. In the app, you can experiment by interacting with a variety of object classes (small, large, constrained, two-handed) and even crush a rock by squeezing it hard enough.”

To help developers get started, Meta also shared 10 tips for using the Interaction SDK to build great hand-driven experiences for the platform. Check out Meta's official developer blog post for more details.